We have released Neural Network Libraries v1.21.0!

Various updates have been made with regards to XAI (eXplainable AI) and Fairness!

We have also made some important changes, such as making inplace option obsolete, adding a quantized tflite converter, optimizing PF.sync_batch_normalization, and many more!

Spotlight

Various XAI/Fairness updates

[XAI] SHAP

We have added SHAP (SHapley Additive exPlanation), which is an approach to explain the output of any machine learning model using game theory. It links optimal credit allocation with local explanations using the classic Shapley values from game theory and their related extensions.

[XAI] Influence Functions

We have also added influence functions that perform data cleansing by Understanding Black-box Predictions.

[Fairness] Gender Bias Mitigation

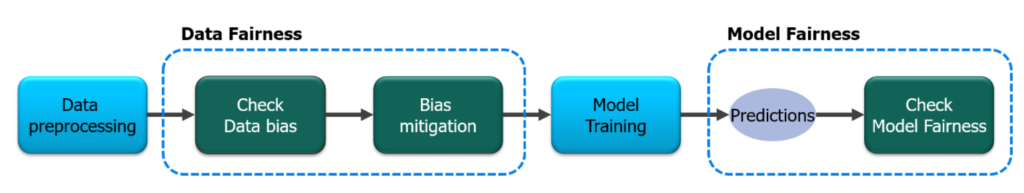

We have added a colab interactive demo of introduction of fairness workflow tutorial. In this tutorial, we have tried to give a gentle introduction to gender bias detection and mitigation to enthusiasts of Responsible AI. There are many ways to detect and mitigate bias, and this tutorial illustrates one simple method to detect bias and mitigate it with reweighing algorithm.

- Interactive demo

| Name | Notebook | Task |

|---|---|---|

| Introduction of Fairness Workflow Tutorial | Dataset/Model Bias Check and Mitigation by Reweighing |

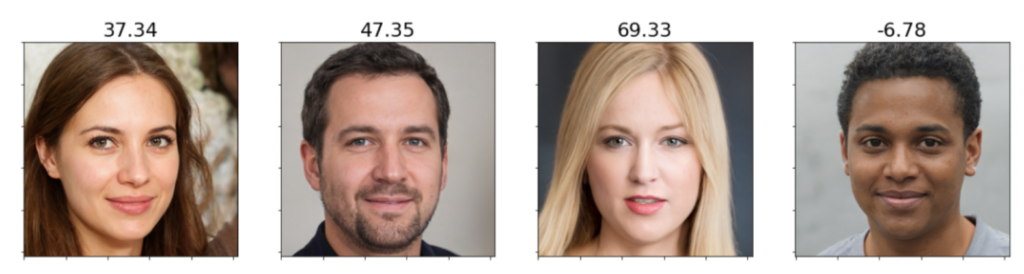

[Fairness] Facial evaluation for skin color

We have also added a colab interactive demo of facial evaluation for skin colors using Individual Typology Angle (ITA), which represents skin color.

Figure: Individual Typology Angle (ITA) scores with different faces by the Masked Images version

- Interactive demo

| Name | Notebook | Task |

|---|---|---|

| Skin Color (Masked Images) | Facial evaluation for skin color |

Make inplace option in most of Function operations obsolete / GPU

In-place operations such as F.add_scalar(x, y, inplace=True) no longer perform computation in-place. The option inplace=True will be simply ignored by this change.

Add a quantized tflite converter to export nnp to int8 tflite

We have optimized our tflite converter, along with a new quantized tflite converter. We have also handled autopep8 encode error.

Optimize PF.sync_batch_normalization

We have optimized the implementation of PF.sync_batch_normalization, which synchronizes the statistics computed between the GPUs during multi-GPU distributed training! Compared to previous cuDNN implementation, it is up to 42 times faster for forward computation and up to 110 times faster for backward computation.

Boolean Indexing Functions / GPU

We have added boolean indexing functions BoolGather, BoolScatter, and BoolFill.

The first two are forward/backward correspondences and typically used as following:

import numpy as np

import nnabla as nn

import nnabla.functions as F

nn.set_auto_forward(True)

input0 = nn.Variable.from_numpy_array([[1, 2], [3, 4], [5, 6]])

mask = nn.Variable.from_numpy_array([1, 0, 1])

output0 = F.bool_gather(input0, mask)

input1 = output0 + 10 # do whatever for reduced array

output1 = F.bool_scatter(input1, mask)

print(output1.d) # [[11, 12], [0, 0], [15, 16]]

BoolFill can be inplaced as:

import numpy as np

import nnabla as nn

import nnabla.functions as F

nn.set_auto_forward(True)

input = nn.Variable.from_numpy_array([[np.inf, 2], [3, np.nan]])

mask = nn.Variable.from_numpy_array([[1, 0], [0, 1]])

output = input.bool_fill(mask, 0)

print(output.d) # inf/nan are replaced with 0, and input.d == output.d

Layers

- Support p=0 for dropout function / GPU

- Ignore label less than 0 in cross entropy / GPU

- add dot & matmul operator

- Remove ReLUCudaCudnn / GPU

- Add roi_align function and tests / GPU

- Higher-order gradients for normalization functions / GPU

- Modify cumprod backward to deal with zero input / GPU

- Support variadic inputs of nbla::functions::concatenate()

- Double backward for cumsum

Build

- Fix APT_OPTS for runtime dockerfiles / GPU

- Skip some tests on 32bit system.

- CI: Restructure dockerfile directory

- Fix build without http_proxy

- temporarily comment out a test which cause randomly crash.

- change image_utils imread behavior for pillow backend compatibility

- Install nnabla_converter to py38 docker images

- CI: Support Nvidia Compatible Driver for CUDA11 docker images

Format Converter

- fix: update onnx_opset_tensorrt, use opset11 version

- support ceil_mode for onnx exporter

- support weightnormalization exporter

- support nnabla to export weightstandardization & instancenormalization & layernormalization in onnx

- support nnabla to export spectralnorm in onnx

Utilities

- feat: reduce create cache log and print every 5%

- Support multiple callback

- Implement custom module nvml to replace pynvml / GPU

- refactor:remove redundant image backend switch

- Inplace methods reverts to using inplace=False

Examples

C Runtime

- feat: Sync api level version from nnabla

- Fix build without http_proxy

- Sync api level version from nnabla

- Allow additional options to commands that use network

Bugfix

- fix status 9 error

- fix race condition for data iterator with cache dataset

- Fix duplicate calls in forward_all

- Properly close file handlers at exception

- Bugfix: fix nn.grad for floating variables

- fix parameter error for graph viewer

- fix indexing leaf variable problem

- Add unitest for graph/parameters issue

- Re-enable the cudnn convolution metrics caching.

- Fix multithread cudnn handle sharing problem